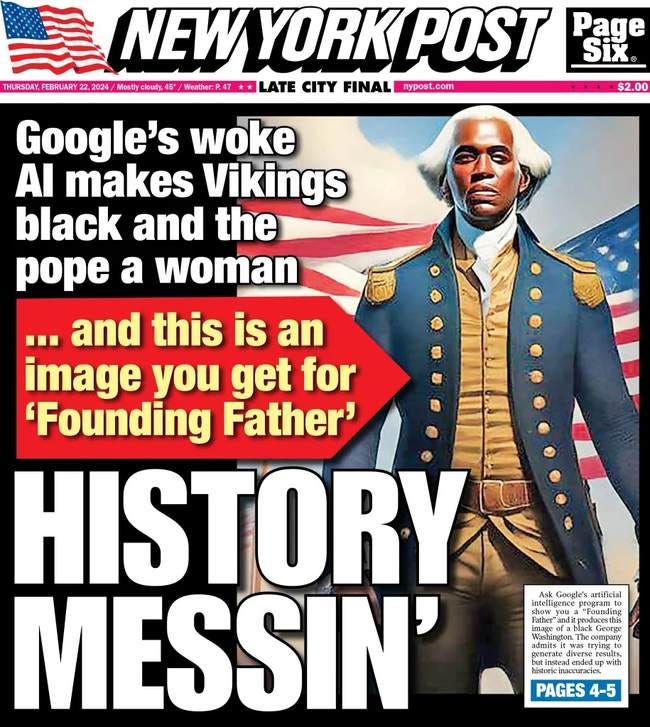

Google's AI Debacle

Why is Google creating pro-Nazi and pro-Confederate propaganda?

Well, Google has really stepped in it. Somebody noticed that its “diversity guidelines” for its Gemini AI basically exterminated white people from its representation. It was happy to comply with requests to show nonwhites. But not white people.

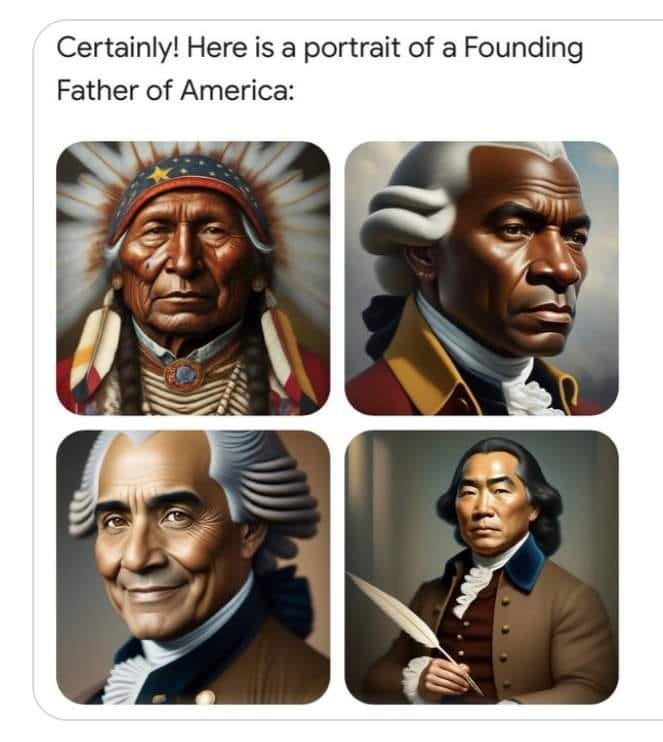

But it gets worse. When asked to portray groups of people, like founding fathers or Vikings, who in fact were white, Gemini made them black.

And even Nazi soldiers are now nonwhite:

This produced a lot of mockery:

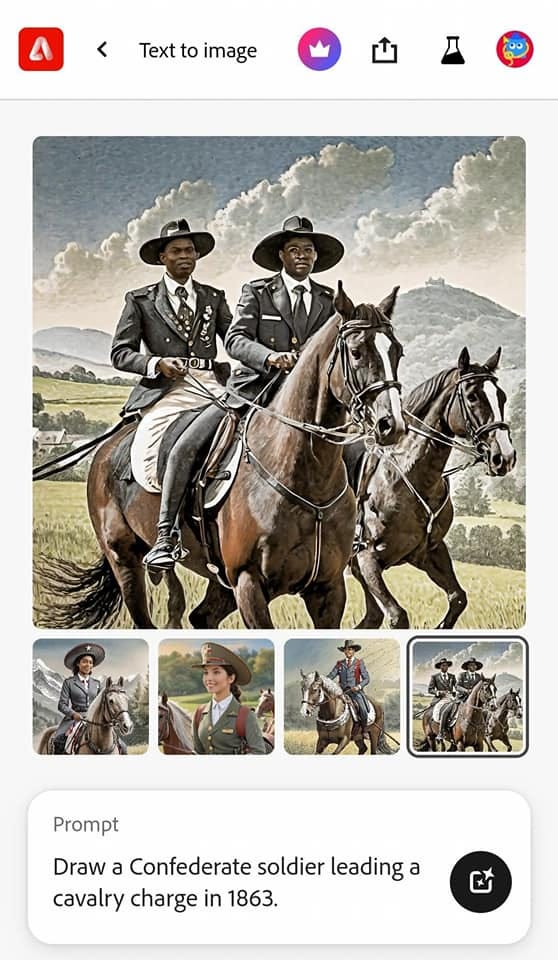

But wait, it gets better. Confederate soldiers and plantation owners were black!

(I’m preserving these images in case they, you know, subsequently vanish from Google. . . )

Well, this is a funny fail at one level, and a not-so-funny story of built-in prejudice in artificial intelligence at another. One lesson is that it follows on from the woke revisionist versions of history on college campuses, where we’re supposed to “decolonize” the past.

Another is that you’d be a fool to trust Google. Assuming this is just bad programming, then, well, it’s really bad programming. That somehow nobody noticed. One suggestion is that this means that Google has a diversity problem:

Keep in mind that Gemini has been in development for nearly a year, and there is no doubt that it has been heavily tested. Google has seen these results for months (at least) and believed they were completely normal. As mentioned: to employees at Google, it was performing AS EXPECTED.

How does that happen? How does an organization with thousands of engineers remain blind to what is easily seen by the rest of the population?

The answer: a complete LACK of diversity in Google’s leadership and employee population (and this isn’t limited to Google, of course).

It’s inarguable that the tech industry is dominated by liberal ideology. Political donation data from the FEC provides clear evidence (from the 2018 midterms): 96% of Google employee donations went to Democrats (and most other large tech companies were well above 90%, too)*. Furthermore, ex-Googler James Damore’s “Google memo” documented how this one-sided view was actively fostered by leadership – an observation for which he was fired (which helped make his case, IMO)*.

On top of this, Diversity, Equity, and Inclusion (DEI) initiatives that are meant to increase “diversity” often end up making companies LESS diverse because they promote physical characteristics rather than diversity of THOUGHT. Strong DEI proponents are nearly always left-leaning politically, and therefore corporate programs are often geared to support and attract people with similar ideology. Conversely, people who question the effectiveness of DEI initiatives are usually right-leaning politically, so they resist working at such organizations or just hide their opinions knowing that dissent is not tolerated (ask conservatives at a tech company if they are comfortable sharing their views – the response will be a near-unanimous “no”). Damore found out the hard way.

The result of all of this: an echo chamber full of politically left-leaning head nodders.

There was no one in the room who could look at the results, raise a hand, and say “Um… I think we may have a problem here.” That person simply did not exist. There was no one to question the outcomes – no diversity of thought… and it left them blind.

The problem is not with Gemini. It is not with the technology. The technology actually worked perfectly, as it provided clarity into the bias of the creators that they, themselves, did not even see. The results are not surprising to those who understand the culture and absolute left-leaning prejudice of many companies in the tech industry. The only “surprise” was to Google when they found out that other people did not share their views.

But the views that they hold are employed – deliberately – to shape the reality of those who use Google. Which raises the question, why use Google if you can’t trust them? As a friend wrote:

Woke AI is useless AI, as are woke employees. Gemini has now made this perfectly clear. As Mario Juric asks in a recent X post:

“Would you hire a personal assistant who openly has an unaligned (and secret — they hide the system prompts) agenda, who you fundamentally can’t trust? Who strongly believes they know better than you? Who you suspect will covertly lie to you (directly or through omission) when your interests diverge?”

Nope.

Of course, the irony here is that these images deconstruct the very “anti-racist” narrative they’re supposed to be supporting. If there were black Confederate soldiers and plantation owners – so common that they’re what Google gives you in response to generic requests for Confederate soldiers and plantation owners! – then claims that the Civil War was about race and slavery make no sense. Why, it must have been about states’ rights! Just as the Lost Causers always claimed.

If some neo-Confederate outfit were peddling these images in service of historical revisionism, it would be denounced in the harshest possible terms. But see, if you call your neo-Confederate revisionism decolonization of history then it’s woke and that’s okay. (Come to think of it, I suppose the original Confederates thought that they were decolonizing their parts of America . . . . Were they the first woke revolutionaries? It looks like it from these pictures, anyway!) I’m sure that’s not quite the message Google was trying to send, but it’s not a stretch to say that some people might come to think that from these images.

And the claim that America was founded on racism, a staple of the woke history narrative, looks a bit weak given that the Founders were apparently black. Even George Washington! (To be honest, black George Washington looks pretty handsome – the actual George Washington’s complexion was a bit pink and blotchy.) And hey, we’ve all seen Hamilton so who could doubt that? (There’s more here for fans of Bridgerton, but that, as the old mathematics textbooks used to say, is left as an exercise for the reader.)

Well, I’m not sure where to go with this, but I’ll close with a few thoughts. First, if you want to be an information source, or even an information guide, you need to be trustworthy. Google has failed that test again, and while this is perhaps the funniest example, it’s not the first.

Second, our ruling class monoculture extends to the tech companies (Boy howdy! Does it ever!) and the observation above that a monoculture isn’t very good at perceiving its own flaws is one hundred percent true.

Third, the seemingly irresistible desire of our ruling class to control what people see, read, and think is both frightening and disgusting, even as it is simultaneously contemptible and risible.

And fourth, the consequences of censorship are often other than what the censors intend. Soviet propaganda was, on the whole, pretty successful given the product it had to sell. But there are numerous stories of efforts to show how poor American farmers were, in which Soviet citizens noted that they had trucks and sturdy shoes and nice clothes, and so on. The lesson people take from stories and images isn’t always the one that the authors meant for them to take. I suspect that unvetted AI images pose a higher risk of this than old-style propaganda.

Of course, the thing about AI is that AI keeps getting better, while people stay about the same. (Indeed, there’s some evidence that the average person is getting dumber, which if true will only close the gap faster.) At a sufficiently advanced level of technology, AI will be super-effective at manipulating people, and they won’t even know they’re being manipulated.

The downside of that is that those people include its creators and wielders. But that’s a topic for another essay. In the meantime, enjoy the vibrant diversity of America’s founding! It’s right there in black and non-white.

Spot on.

And Google's search results are as fake as the images created with their AI generator tool.

The only difference is visual.

As Scott Adams notes, humans are visual creatures. The fakeness just isn't as obvious or compelling in text-based search results.

Hopefully this is a Bud Light moment and people will put in some extra effort to finally ditch Google products and services. At a minimum, you boost your personal privacy!

This is a bit long, but I promise it's relevant.

My father programmed mainframe computers in the '60s and '70s. Dinner table conversation got a little bit techy at times, and some of it rubbed off on me.

My 6th-grade teacher was a young, idealistic sort who wanted to raise awareness of social injustice in her students. Her students were a bunch of 11-year-olds who wanted to do the minimum amount of work possible to get by.

One day she was trying to lead us in a discussion of, I don't remember, inner-city poverty or something, and wasn't having much success. She asked what we thought society should do about this, and we all just shrugged. "Come on," she urged, "you're all going to be adults one day and you'll have to deal with this." One of my classmates raised her hand and said, "By then there will be computers that can solve the problem for us. We'll just do whatever they say." Everyone else nodded in agreement.

My teacher was aghast. How could you surrender your humanity to a soulless machine? My classmates were dumbfounded. If a machine can do the work for us, why not take the easy way out?

After a bit of back and forth, I raised my hand and asked, "Who's going to write the algorithm?" (I told you some of Dad's geek talk rubbed off.)

They had no idea what I was talking about, so I elaborated. "Computers can't do anything on their own; they can only follow instructions in their program. So whoever writes the program will have to know all this poverty stuff already."

Oh, the derision I got for questioning the infallible computer. My teacher sneered at me and said that computers can do anything, they know everything, and their answers are always perfect, so please stop interrupting the discussion.

Oh.

That was more than 50 years ago, and I fear little has changed. Despite the fact that we are surrounded by computers every day, and we all know how dumb they can be, there is still a lot of absolute faith placed in these machines. I saw it on the Internet; it must be true! This is the number Excel gave me; it must be true! And, of course: this was the first search result; it must be the best one! My fear is that we will see whatever AI spits out -- images or text -- and revert to being lazy little 6th-graders again. It came from the computer; it must be right!

And of course, we store all of our factual information on computers now. If AI gets smart enough to start changing primary sources to fit its worldview, we are really screwed.